ChronoEdit

Master NVIDIA's ChronoEdit model. Learn how to make physically consistent image edits, from camera moves to object manipulation.

What is ChronoEdit

ChronoEdit is a specialized generative AI framework developed by NVIDIA and the University of Toronto. It takes a new "hybrid" approach to image editing by treating the whole process as a video generation task. Rather than simply overlaying new pixels, ChronoEdit models the causal sequence of events.

For example, if you ask it to "add a cat sitting on a bench," it first logically establishes the bench and only then places the cat on it, mirroring real-world cause and effect. Thanks to this "temporal reasoning," the model preserves physical details such as textures, folds, and lighting, which makes it a valuable tool for simulations where adherence to physical laws matters more than aesthetics alone.

Model technical specifications

| Property | Specification |
|---|---|
| Developer | NVIDIA & University of Toronto |
| License | Commercial use permitted |
| Speed | Slow to moderate (compute-intensive) |
| Input | Single image only |
| 3D awareness | High (preserves structure and textures) |
| Best for | Physics simulation, robotics data, object rotation |

Key strengths

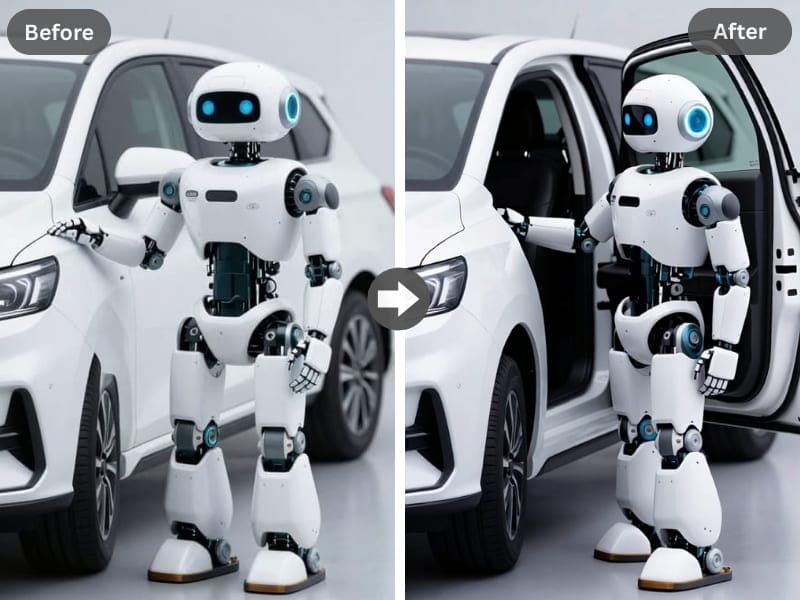

Causal reasoning and logic

Unlike conventional editors, which merely composite images, ChronoEdit follows the logical order of edits. It ensures that added objects interact naturally with their surroundings.

This lets the model produce complex interactions, such as a robotic arm grasping an object or a car braking on a road, while accounting for the physical consequences of the action.

Understanding of 3D space

The model has a strong sense of 3D structure. When you rotate an object, for example turning a knight to face the camera, ChronoEdit correctly regenerates surface details such as logos or patterns on the armor from the new angle. It preserves the volume and geometry of objects instead of flattening them.

Advanced tips and prompt templates

Causal order

Because the model reasons along timelines, try structuring your prompt in the order events would actually happen.

Template: "First [background/context], then [action/object interaction]."

Example: "A park bench in the sun. A cat jumps onto the bench and sits down."
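The causal-order template can be applied programmatically when you prepare many prompts. The sketch below is only an illustration: `causal_prompt` is a hypothetical helper written for this example, not part of ChronoEdit or Somake, and it simply joins a scene description and its follow-up actions in the order they occur.

```python
def causal_prompt(context: str, *actions: str) -> str:
    """Build a prompt that states the scene first, then each action in order.

    Hypothetical helper for illustration; it only formats text and does not
    call any model or service.
    """
    steps = [context, *actions]
    # Trim whitespace and trailing periods so every step ends with exactly one period.
    cleaned = [step.strip().rstrip(".") for step in steps if step.strip()]
    return " ".join(f"{step}." for step in cleaned)


if __name__ == "__main__":
    print(causal_prompt(
        "A park bench in the sun",
        "A cat jumps onto the bench and sits down",
    ))
```

Keeping the context as the first argument makes it hard to accidentally describe the action before the scene it depends on.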

Precise object rotation

For more complex rotations, be specific about the target angle.

Template: "Rotate [object] to face [direction]. Make sure [detail] is visible."

Example: "Rotate the anime character to face the camera. Make sure the logo on the T-shirt is distorted to follow the folds of the fabric."
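The rotation template can be filled in the same way. This is a minimal sketch under the assumption that you only need the prompt text; `rotation_prompt` and its parameter names are hypothetical and exist only for this example.

```python
from typing import Optional


def rotation_prompt(subject: str, direction: str, detail: Optional[str] = None) -> str:
    """Fill the rotation template: target orientation first, then the detail to keep.

    Hypothetical helper for illustration; it only formats text.
    """
    prompt = f"Rotate {subject} to face {direction}."
    if detail:
        # The detail clause is optional, mirroring the second sentence of the template.
        prompt += f" Make sure {detail} is visible."
    return prompt


if __name__ == "__main__":
    print(rotation_prompt("the knight", "the camera", "the crest on the armor"))
```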

Multimodal sketches as input

ChronoEdit supports a "sketch -> image" workflow. Upload a simple pencil sketch and use a prompt to convert it into a detailed style, such as "Japanese black-and-white anime scene," while the model strictly preserves the sketch's layout.

Use cases

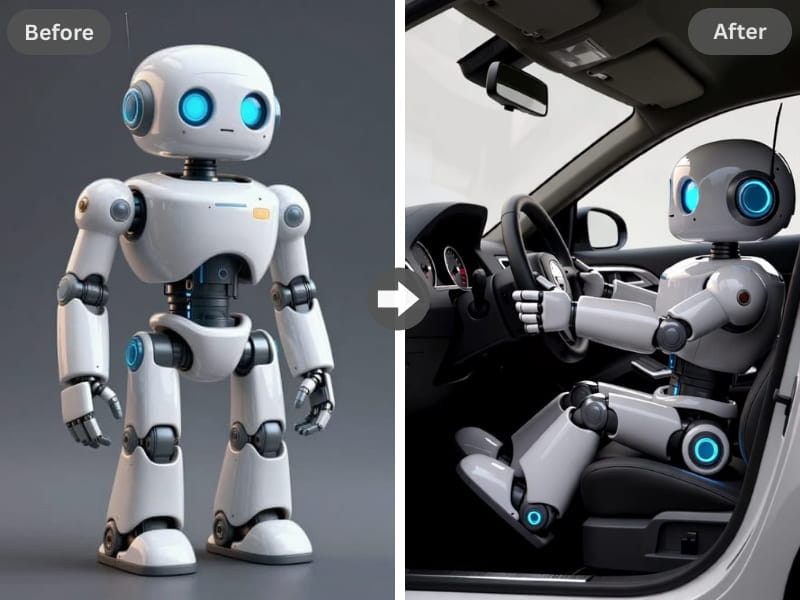

Autonomous driving and robotics simulation

ChronoEdit can simulate "dangerous situations" that are hard to capture in real life, such as car crashes or emergency braking. Because it respects physics, it is a valuable aid for creating synthetic training data for autonomous systems.

Precise functional edits

The model excels at surgically precise changes. For example, it can remove a specific item (such as glasses from a face) without distorting the face, or add an object (such as a red coat) that casts a correct shadow given the lighting in the scene.

Concept art and style transfer

Designers can use ChronoEdit to transform an object's material, for example turning a photo of a cat into a "PVC figurine." Although the model leans toward realism, it can also handle specific styles (such as Gongbi painting) while preserving the main subject.

Why choose Somake

No complex hardware setup

Running this video-based model locally is complicated and slow on consumer graphics cards. Somake offers an instant, optimized environment that handles the setup for you, so you can focus on writing the right prompt.

Stable generation environment

We have tuned the generation parameters to make results as reliable as possible. By optimizing tokens and step count in our backend, Somake offers a more stable experience with this experimental technology.

All-in-one creative suite

Get instant access to a full suite of digital tools for creating professional images, dynamic videos, and engaging text, all in one clear, unified interface.

Frequently asked questions

No, at this time ChronoEdit supports only a single image as input. It creates the resulting "target" state solely from that one source and your text prompt.

ChronoEdit is a specialized "hybrid" model focused on physics and causal logic. While Qwen or Flux may be better for general aesthetic edits, ChronoEdit excels where 3D consistency and physical reasoning are needed.

The model generates a sequence of frames, like a video, from which it derives the final image. This process is far more compute-intensive than standard image generation, but it yields smoother transitions and better adherence to physics.

It is primarily a research model intended for simulations and complex structural edits. For simple skin smoothing or color adjustment, conventional tools will be faster. ChronoEdit is best suited to changes in the content or physics of an entire scene.

Although it has some spatial ability to regenerate logos, it is not a specialized typography tool. Text generation may be less accurate than in models trained specifically for rendering type.